A simple local implementation of a meeting transcription solution

Introduction

In a recent engagement with a team that provides secretariat services, we saw an opportunity to streamline the existing process of creating meeting minutes. Currently, a significant amount of time is spent listening to meetings captured on a voice recorder to make sure that all the points raised are captured comprehensively.

At some of these offline meetings, confidential issues and sensitive data are often shared. While recording these discussions is necessary for reference, sharing such recordings over the cloud or with third-party transcription services poses security risks, and leakage of confidential information could have severe consequences. As such, we decided to experiment with a local implementation rather than services available in the cloud.

Based on our initial exploration of the pre-trained open-source models available on the Hugging Face Hub, we found that models from the Whisper family are easy to set up and transcribe with high accuracy. For a comparison of different models, we refer to the Hugging Face leaderboard page.

Initial Setup

Installation and setup of ffmpeg

To use the Whisper family of transformer models, ffmpeg needs to be installed first. The steps I found most straightforward for setting up ffmpeg are as follows:

- Download the installation package from the official site: https://ffmpeg.org/download.html

- Follow the guide in this online article to set up ffmpeg: https://www.wikihow.com/Install-FFmpeg-on-Windows

- Create a new environment for the installation, just to be safe

Generally, this should be sufficient. If any additional Python bindings are required, I typically search libraries.io and pick packages based on a combination of the most stars, the highest SourceRank and, if possible, relatively recent updates.
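Once ffmpeg is installed, it can be worth confirming that it is reachable from Python before loading any model. The snippet below is a minimal sketch using only the standard library; the helper names `find_ffmpeg` and `ffmpeg_version_line` are made up for illustration, and the check assumes ffmpeg was added to the system PATH during setup.

```python
import shutil
import subprocess


def find_ffmpeg():
    """Return the full path of the ffmpeg executable, or None if it is not on PATH."""
    return shutil.which("ffmpeg")


def ffmpeg_version_line(ffmpeg_path):
    """Return the first line printed by `ffmpeg -version` (empty string if none)."""
    result = subprocess.run(
        [ffmpeg_path, "-version"],
        capture_output=True,
        text=True,
        check=True,
    )
    lines = result.stdout.splitlines()
    return lines[0] if lines else ""


ffmpeg_path = find_ffmpeg()
if ffmpeg_path is None:
    print("ffmpeg was not found on PATH; revisit the installation steps above.")
else:
    print(f"Found ffmpeg at {ffmpeg_path}")
    print(ffmpeg_version_line(ffmpeg_path))
```

If the first message appears even though ffmpeg is installed, the most likely cause is that the terminal or notebook was started before PATH was updated, so restarting it is a reasonable first thing to try.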

Setting up PyTorch, Hugging Face and loading the pre-trained transformers model

Install the prerequisite Python libraries:

- PyTorch installation: https://pytorch.org/

- Transformers: https://huggingface.co/docs/transformers/installation
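After following the two installation guides, a quick check that both libraries landed in the active environment can save some debugging later. This is a small sketch using the standard library's `importlib.metadata`; the helper name `installed_version` is hypothetical, and it assumes the packages were installed under their usual distribution names `torch` and `transformers`.

```python
from importlib import metadata


def installed_version(distribution_name):
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(distribution_name)
    except metadata.PackageNotFoundError:
        return None


# Report the status of the two libraries this article relies on.
for name in ("torch", "transformers"):
    version = installed_version(name)
    status = version if version is not None else "not installed"
    print(f"{name}: {status}")
```

A "not installed" line usually means the libraries went into a different environment from the one currently active.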

Transcribe the audio with Whisper Models

First, we import the necessary libraries.

# Load required libraries
import torch
from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor, pipeline

Second, we load the pre-trained model. For testing purposes, we load distil-small.en, the smallest and fastest Distil-Whisper model, but the same code can be used for other models in the Whisper family.

# Load the pre-trained model; use the GPU with half precision when one is available
device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

model_id = "distil-whisper/distil-small.en"

model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_id, torch_dtype=torch_dtype, use_safetensors=True
)
model.to(device)

processor = AutoProcessor.from_pretrained(model_id)

Third, we build the transcription pipeline, load our sample file and generate the output.

# Build the speech recognition pipeline from the model and its processor
pipe = pipeline(
    "automatic-speech-recognition",
    model=model,
    tokenizer=processor.tokenizer,
    feature_extractor=processor.feature_extractor,
    max_new_tokens=128,
    torch_dtype=torch_dtype,
    device=device,
)

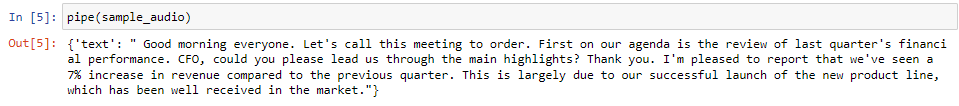

sample_audio = "Sample Board Recording.wav"

pipe(sample_audio)Here is how the sample output looks like based on a simple audio recording. Even based on the distil-small model, the transcription looks to be very accurate as least for the simple sample.
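For meeting minutes, it is often handy to know roughly when something was said. The pipeline returns a dictionary with a "text" field, and when it is called with `return_timestamps=True` it also returns a "chunks" list in which each entry has a "timestamp" pair and its own "text". The sketch below turns such a result into one line per chunk. The helper names `format_timestamp` and `format_transcript` are made up for illustration, and the example result is hand-written rather than real model output, so the snippet runs without loading a model.

```python
def format_timestamp(seconds):
    """Convert a number of seconds into an H:MM:SS string."""
    total = int(seconds)
    hours, remainder = divmod(total, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours}:{minutes:02d}:{secs:02d}"


def format_transcript(result):
    """Turn a pipeline result into one line per timestamped chunk.

    Falls back to the plain "text" field when no "chunks" are present.
    """
    chunks = result.get("chunks")
    if not chunks:
        return result["text"].strip()
    lines = []
    for chunk in chunks:
        start, _end = chunk["timestamp"]
        # Guard against a missing start time by showing a placeholder label.
        label = format_timestamp(start) if start is not None else "?:??:??"
        lines.append(f"[{label}] {chunk['text'].strip()}")
    return "\n".join(lines)


# Hand-written stand-in for pipe(sample_audio, return_timestamps=True);
# the wording of the transcript is invented for illustration.
example_result = {
    "text": " Good morning everyone. Let us begin with the first agenda item.",
    "chunks": [
        {"timestamp": (0.0, 2.5), "text": " Good morning everyone."},
        {"timestamp": (2.5, 6.0), "text": " Let us begin with the first agenda item."},
    ],
}
print(format_transcript(example_result))
```

With the real pipeline, the same function would be called as `format_transcript(pipe(sample_audio, return_timestamps=True))`, and the resulting string could then be written to a text file as a first draft for the minutes.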

Conclusion and next steps

In this short article, we explored a simple way to implement a meeting transcription solution using the pre-trained Whisper family of models available on Hugging Face. Further enhancements could include speaker identification, as well as fine-tuning for a more localized setting if the pre-trained models are unable to pick up local slang.

There is also a good course on Hugging Face that delves into applications of transformers to audio data: https://huggingface.co/learn/audio-course/chapter0/introduction